Hardware checklist for an AI / ML workstation (based on your vault notes)

| Component | Minimum / Recommended | Why it matters |

|---|---|---|

| CPU | 2 × high‑core Intel Xeon Scalable or AMD EPYC (≥ 32 cores, ≥ 8 memory channels) | Parallel training and data preprocessing benefit from many threads and high memory bandwidth. |

| System RAM | ≥ 256 GB (512–1 TB for very large models / datasets) | Keeps the CPU side of GPU‑accelerated workloads fed; also useful when you need to hold multiple models or large embeddings in memory. |

| GPU(s) | • FP64‑heavy training → NVIDIA compute class (e.g., L40S, H200).

• FP32/FP16‑only work → RTX 6000 Ada or Blackwell / RTX 5090 / RTX 5080 (≥ 12–32 GB VRAM each) |

CUDA is the de‑facto standard for ML libraries; compute GPUs give double‑precision support and higher raw throughput. |

| Total GPU VRAM | ≥ 2× the amount of system RAM you have (e.g., 2 × 32 GB = 64 GB → need ≥ 128 GB RAM) | Ensures enough CPU memory for staging, mapping, and avoiding stalls when transferring data between host and device. |

| Storage | • OS / libraries: NVMe SSD ≥ 1 TB • Training datasets / checkpoints: additional NVMe (≥ 1 TB) or SATA SSD (2–4 TB). • Very large corpora: 10 GbE‑connected NAS or local HDDs. |

Fast NVMe reduces data loading times; scratch space can be useful for pipelines that spill to disk. |

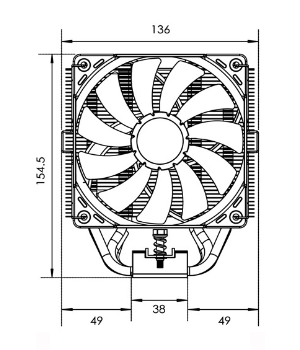

| Cooling & Power | Adequate airflow (passive cooling works for L40S/H200); PSU ≥ 1200 W (adjust per GPU count) | High‑end GPUs draw significant power and generate heat; proper cooling keeps performance stable. |

| Networking | 10 GbE NIC (or higher) for NAS/cluster connectivity | Enables quick transfer of large datasets or model shards when working in a distributed setting. |

Quick Take‑away

- CPU + RAM: 32‑core CPU, 256–512 GB RAM (up to 1 TB if your budget allows).

- GPU: Use NVIDIA compute GPUs for FP64 needs; otherwise high‑end RTX cards with ≥12 GB VRAM each.

- Memory Ratio: Keep system RAM at least twice the total GPU VRAM.

- Storage: NVMe 1 TB + extra NVMe or SATA SSD for data and checkpoints; use a 10 GbE‑connected NAS or local HDDs for very large corpora.
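
The memory‑ratio rule above is easy to check in code. This is a minimal sketch assuming the 2× ratio from the checklist; the helper names `min_system_ram_gb` and `ram_is_sufficient` are hypothetical and exist only for this example.

```python
def min_system_ram_gb(gpu_vram_gb, ratio=2.0):
    """Minimum system RAM (GB) for the given per-GPU VRAM sizes (GB).

    ratio defaults to the checklist's rule of thumb: RAM >= 2 x total VRAM.
    """
    return ratio * sum(gpu_vram_gb)


def ram_is_sufficient(system_ram_gb, gpu_vram_gb, ratio=2.0):
    """True if the installed RAM meets the RAM-to-VRAM rule."""
    return system_ram_gb >= min_system_ram_gb(gpu_vram_gb, ratio)


if __name__ == "__main__":
    gpus = [32, 32]  # two 32 GB cards, as in the checklist example
    print(min_system_ram_gb(gpus))       # 128.0
    print(ram_is_sufficient(256, gpus))  # True
```

With two 32 GB cards the rule gives 128 GB, so the 256 GB baseline leaves room for a third or fourth card of the same size.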

These specs will comfortably support most AI/ML workloads, from large‑batch training to multi‑GPU scaling, while keeping data transfer and memory bottlenecks in check.
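
To judge whether a 10 GbE link is enough for your datasets, a back‑of‑the‑envelope transfer‑time estimate helps. The sketch below assumes decimal units (1 GB = 10^9 bytes) and an `efficiency` factor of 0.8 to account for protocol overhead; both the function name `transfer_time_seconds` and that factor are assumptions, and real throughput also depends on the NAS and its disks.

```python
def transfer_time_seconds(size_gb, link_gbit_per_s=10.0, efficiency=0.8):
    """Estimate seconds needed to move size_gb gigabytes over a network link.

    link_gbit_per_s -- nominal link speed in gigabits per second
    efficiency      -- assumed fraction of nominal speed actually achieved
    """
    if link_gbit_per_s <= 0:
        raise ValueError("link speed must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    # 8 bits per byte: a 10 Gbit/s link moves at most 1.25 GB/s.
    gb_per_second = link_gbit_per_s / 8 * efficiency
    return size_gb / gb_per_second


if __name__ == "__main__":
    seconds = transfer_time_seconds(1000)  # a 1 TB dataset over 10 GbE
    print(f"{seconds / 60:.1f} minutes")
```

At the theoretical maximum (`efficiency=1.0`), 1 TB over 10 GbE takes 800 seconds, so multi‑terabyte corpora are better staged onto local NVMe before training.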